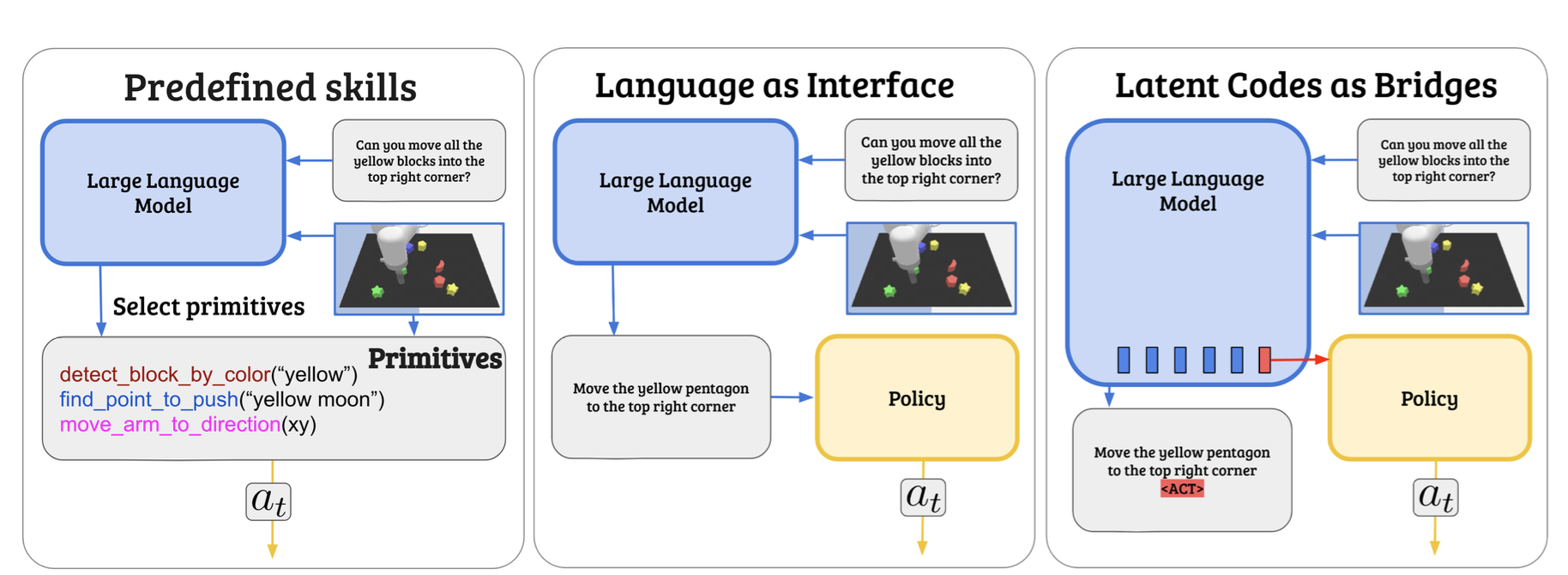

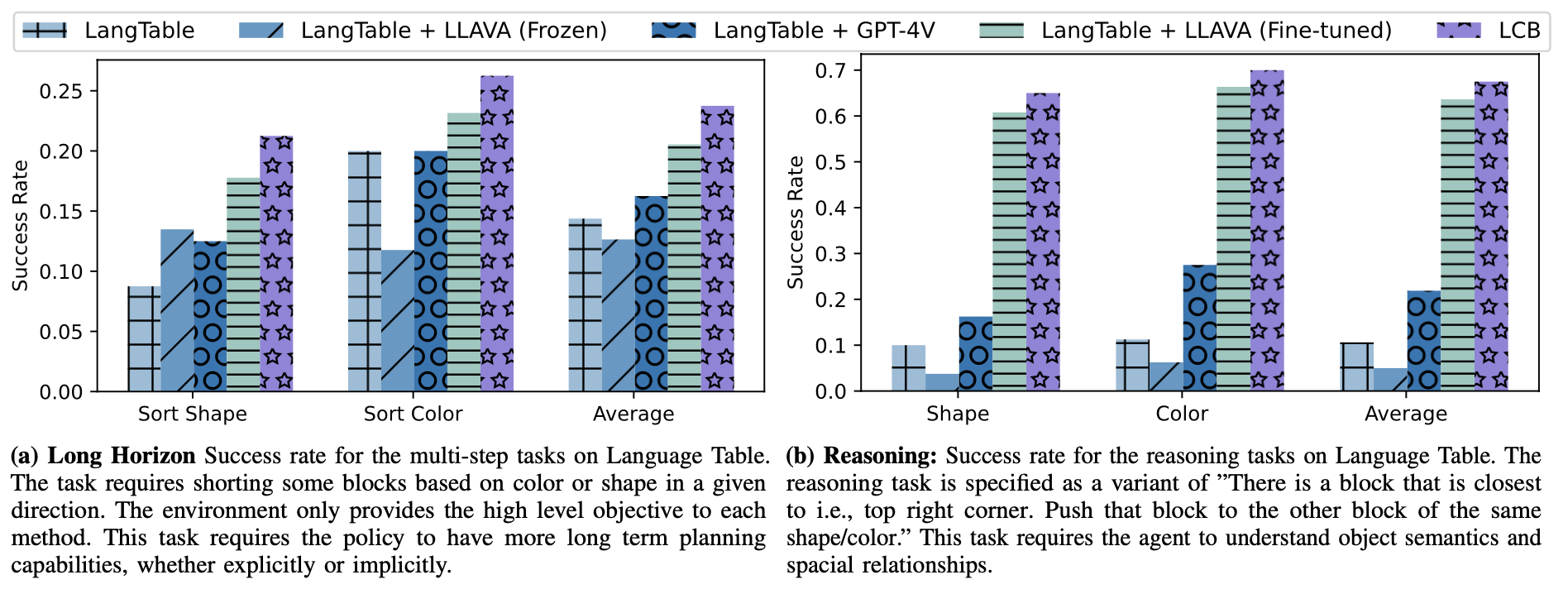

Hierarchical control for robotics has long been plagued by the need for a well-defined interface layer to communicate between high-level task planners and low-level policies. With the advent of LLMs, language has been emerging as a prospective interface layer. However, this has several limitations. Not all tasks can be decomposed into steps that are easily expressible in natural language (e.g. performing a dance routine). Further, it makes end-to-end finetuning on embodied data challenging due to domain shift and catastrophic forgetting. We introduce our method, Learnable Latent Codes as Bridges (LCB), as an alternative architecture to overcome these limitations. LCB uses a learnable latent code to act as a bridge between LLMs and low-level policies. This enables LLMs to flexibly communicate goals in the task plan without being entirely constrained by language limitations. Additionally, it enables end-to-end finetuning without destroying the embedding space of word tokens learned during pre-training. Through experiments on Language Table and Calvin, two common language-based benchmarks for embodied agents, we find that LCB vastly outperforms baselines (including those with GPT-4V) that leverage pure language as the interface layer on tasks that require reasoning and multi-step behaviors.

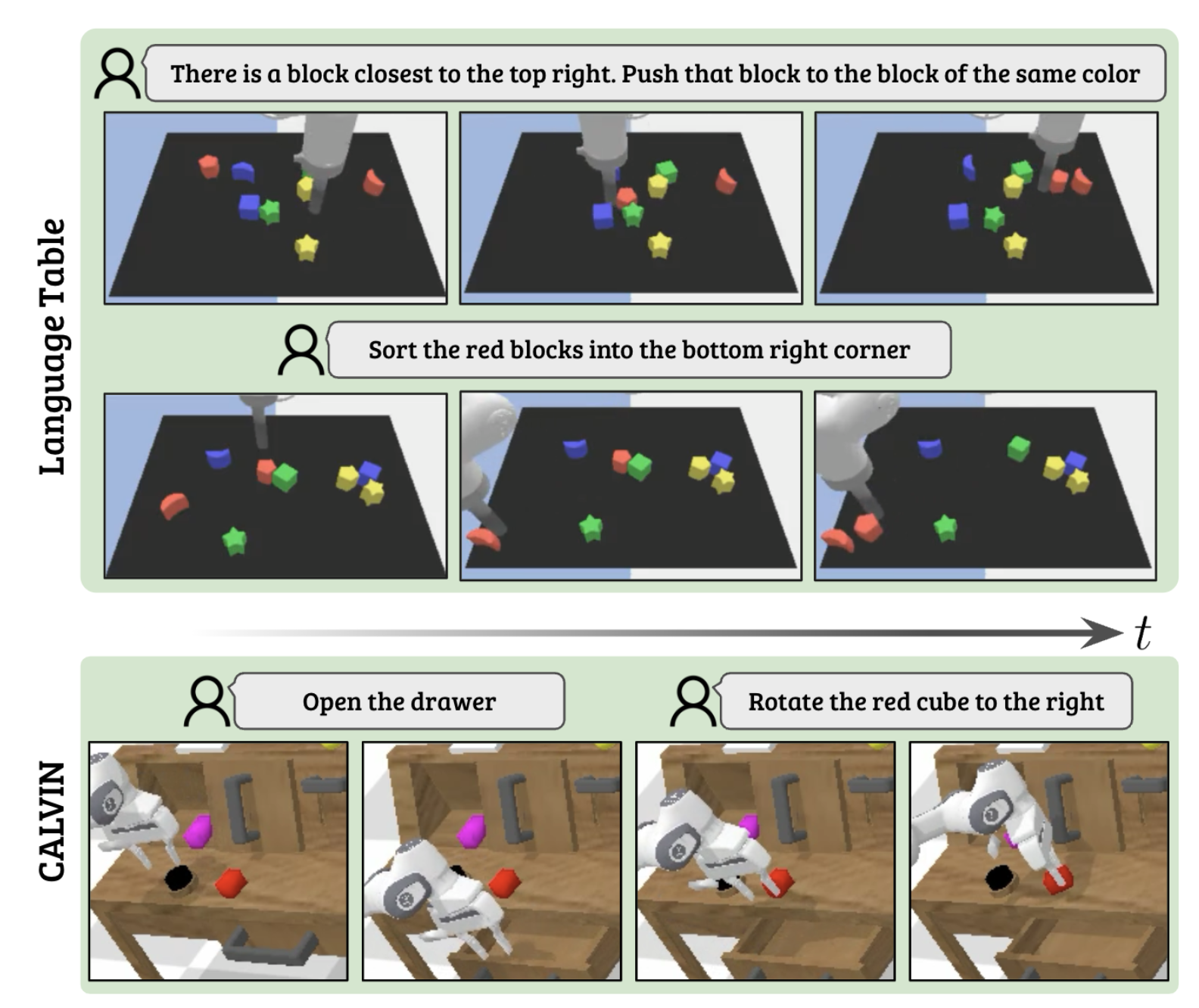

We wish to develop a hierarchical policy architecture that can enable robots to perform a variety of manipulation tasks when provided with free-form language descriptions. Specifically, we seek an architecture that can handle low-level actions for fine-grained or contact-rich tasks (e.g. pushing, 6D object manipulation) while also having the capability to reason and plan without any external step-by-step instructions.

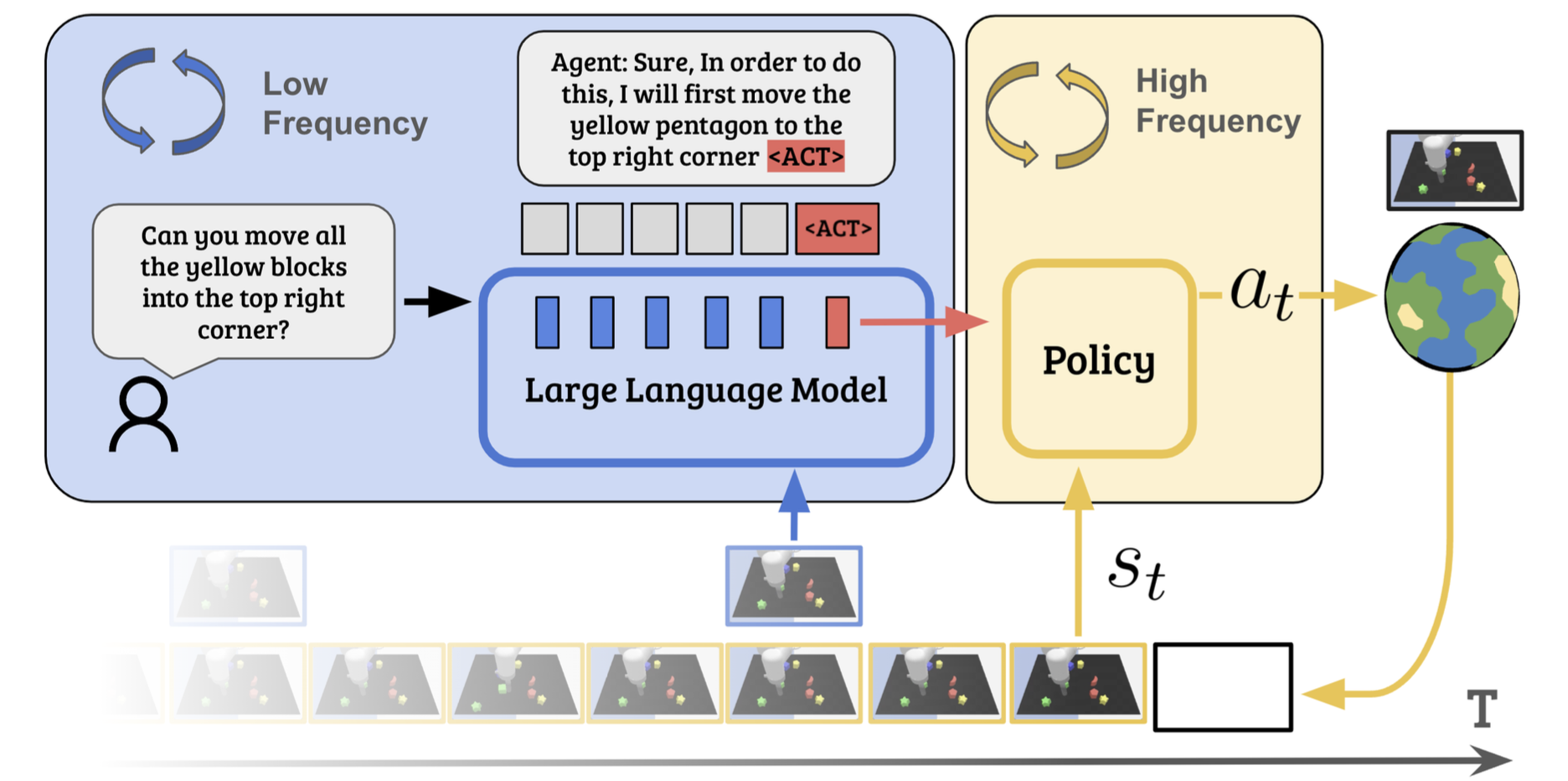

Our key insight is that we can introduce an additional latent code to act as a bridge between the high-level LLM and the low-level language-conditioned policy. We augment the LLM’s tokenizer by adding a specialized <ACT> token, prompting the model to predict this token in response to actionable questions. The last-layer embedding of the <ACT> token is then used as a latent goal for the downstream policy network. This learnable <ACT> token’s embedding lets the LLM pass abstract goals and nuances to the low-level policy, details that are not easily conveyed through language alone.
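The data flow described above can be illustrated with a toy sketch. This is not the authors' implementation: the vocabulary, the random "hidden states" standing in for the LLM's last layer, the linear `toy_policy`, and all dimensions are hypothetical placeholders chosen only to show how an <ACT> embedding could be located and handed to a policy. Taking the first <ACT> position is also an assumption.

```python
import random

ACT_TOKEN = "<ACT>"  # special token name taken from the text


def add_special_token(vocab, token):
    """Append a token to the vocabulary (if absent) and return its id."""
    if token not in vocab:
        vocab[token] = len(vocab)
    return vocab[token]


def extract_act_latent(token_ids, hidden_states, act_id):
    """Return the last-layer hidden state at the first <ACT> position.

    Returns None when the model did not emit <ACT>, i.e. the query
    was not treated as actionable. (Using the first occurrence is an
    assumption of this sketch.)
    """
    for tok, h in zip(token_ids, hidden_states):
        if tok == act_id:
            return h
    return None


def toy_policy(latent, observation, weights):
    """Hypothetical low-level policy: a linear map over [latent; observation]."""
    features = latent + observation  # list concatenation
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


# --- toy demo with made-up sizes and random values ---
rng = random.Random(0)
vocab = {"push": 0, "the": 1, "block": 2}
act_id = add_special_token(vocab, ACT_TOKEN)

hidden_dim, obs_dim, action_dim = 4, 3, 2

# Stand-in for the LLM's generated tokens and their last-layer hidden states.
token_ids = [vocab["push"], vocab["block"], act_id]
hidden_states = [
    [rng.uniform(-1, 1) for _ in range(hidden_dim)] for _ in token_ids
]

latent_goal = extract_act_latent(token_ids, hidden_states, act_id)
observation = [0.1, 0.2, 0.3]
weights = [
    [rng.uniform(-1, 1) for _ in range(hidden_dim + obs_dim)]
    for _ in range(action_dim)
]
action = toy_policy(latent_goal, observation, weights)
```

In a real system the hidden states would come from the pretrained LLM and the policy would be a learned network; the point of the sketch is only that the policy is conditioned on a continuous vector rather than on decoded text, which is what allows gradients to flow from the policy back into the <ACT> embedding during finetuning.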

We systematically evaluated LCB across a diverse set of environments and tasks to demonstrate the efficacy of integrating a pretrained Large Language Model (LLM) with a domain-specific, pretrained low-level policy. Our primary objective was to show the enhanced capabilities of the resulting policy, specifically its high-level language comprehension coupled with nuanced, domain-specific physical awareness. Through our experiments, we aim to answer the following questions:

@misc{shentu2024lcb,
  title={From LLMs to Actions: Latent Codes as Bridges in Hierarchical Robot Control},
  author={Yide Shentu and Philipp Wu and Aravind Rajeswaran and Pieter Abbeel},
  year={2024},
}